Research

- My Ph.D. Annual Review Presentation Slides: 1st year / 2nd year

- My Thesis Proposal Presentation: on YouTube / on Bilibili / Slides

- My Dissertation Presentation: on YouTube / on Bilibili / Slides

- My Research Statement and Teaching Statement

Categories

- Intelligent Mobile Text Entry Interactions 📱

- Ubiquitous Text Entry 🙌

- Evaluating Text-based Communication Systems 🧐

- Assistive Digital Communication Systems 👨‍🦯

- Talking with Smart Assistants 🤖

- Digital Information Wellbeing 🧘🏻

- MISC 💡

Intelligent Mobile Text Entry Interactions 📱

| The ACM CHI Conference on Human Factors in Computing Systems (CHI), 2021 Video My presentation PhraseFlow is a phrase-level input keyboard that can correct previously entered text based on subsequent input. For example, if the user types "I love in Seattle", the keyboard will correct "love" to "live" after it sees "in Seattle". The project evaluated the usability of PhraseFlow and attempted to design a practically usable phrase-level keyboard.  |

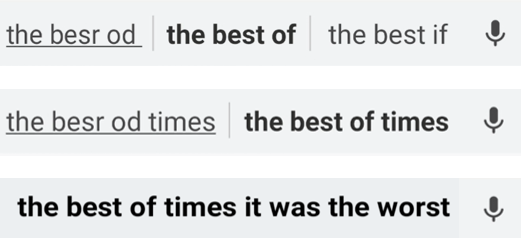

| The ACM Symposium on User Interface Software and Technology (UIST), 2020 Demo Wenzhe's presentation JustCorrect is a post-correction technique for smartphones, in the same genre as Type, Then Correct. JustCorrect uses word embeddings and language models to detect errors and display correction options automatically.  |

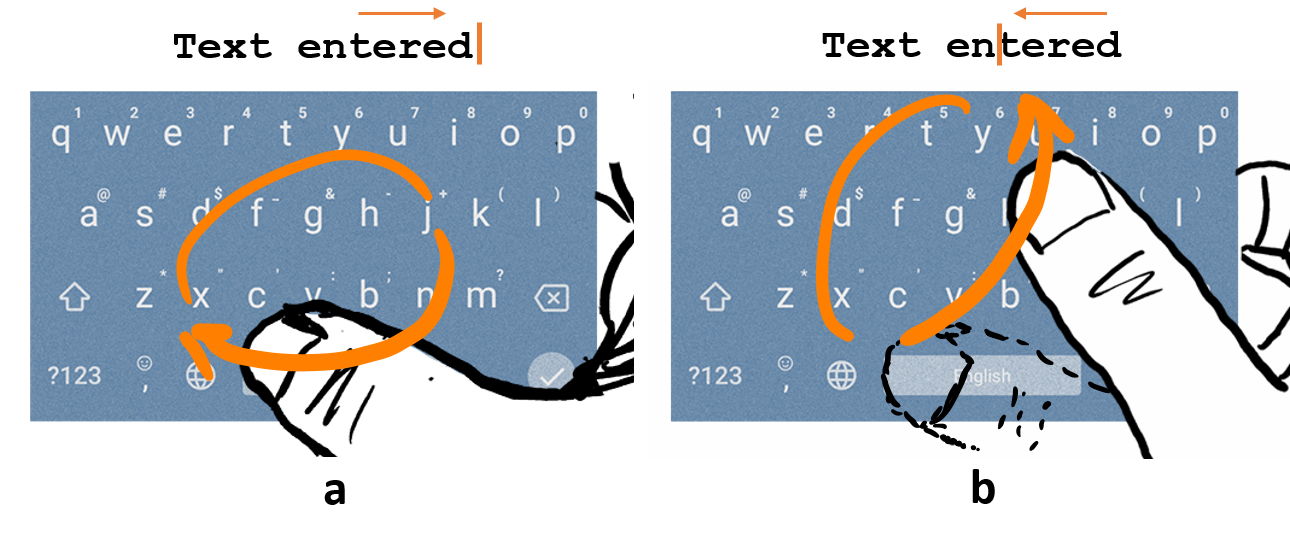

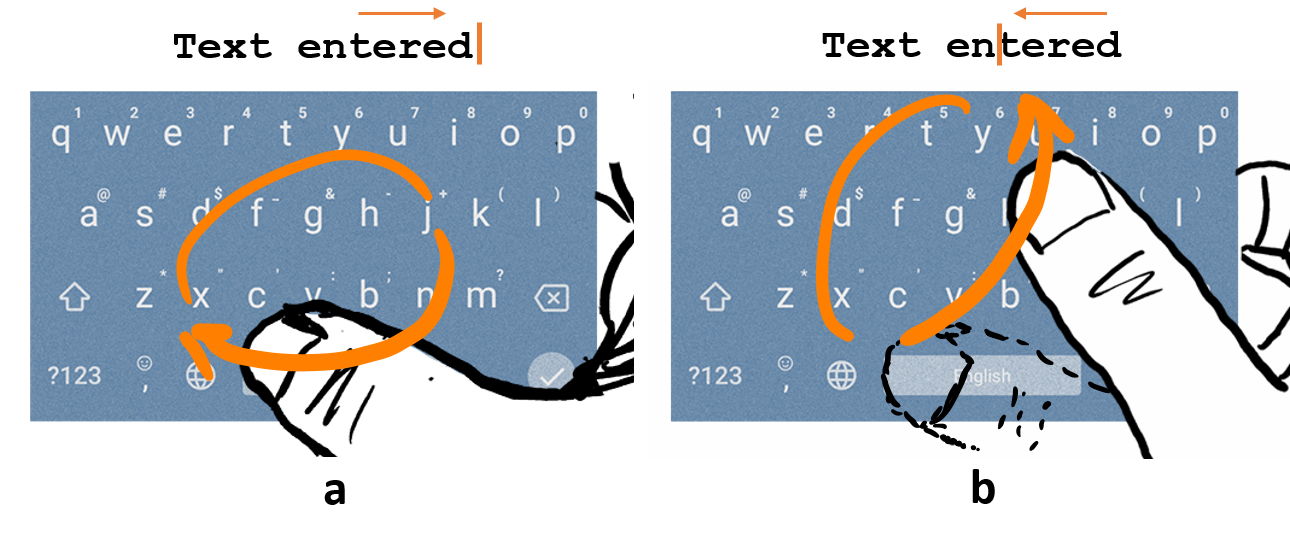

| Proceedings of Graphics Interface (GI), 2020 Video My presentation We proposed a set of on-keyboard text editing gestures for the mobile keyboard, similar to desktop keyboard shortcuts, including ring, letter, and swipe gestures that facilitate fast editing tasks such as cursor movement, copy, paste, cut, and undo. Gedit provides one- and two-handed operation modes, and is also compatible with gesture typing input.  |

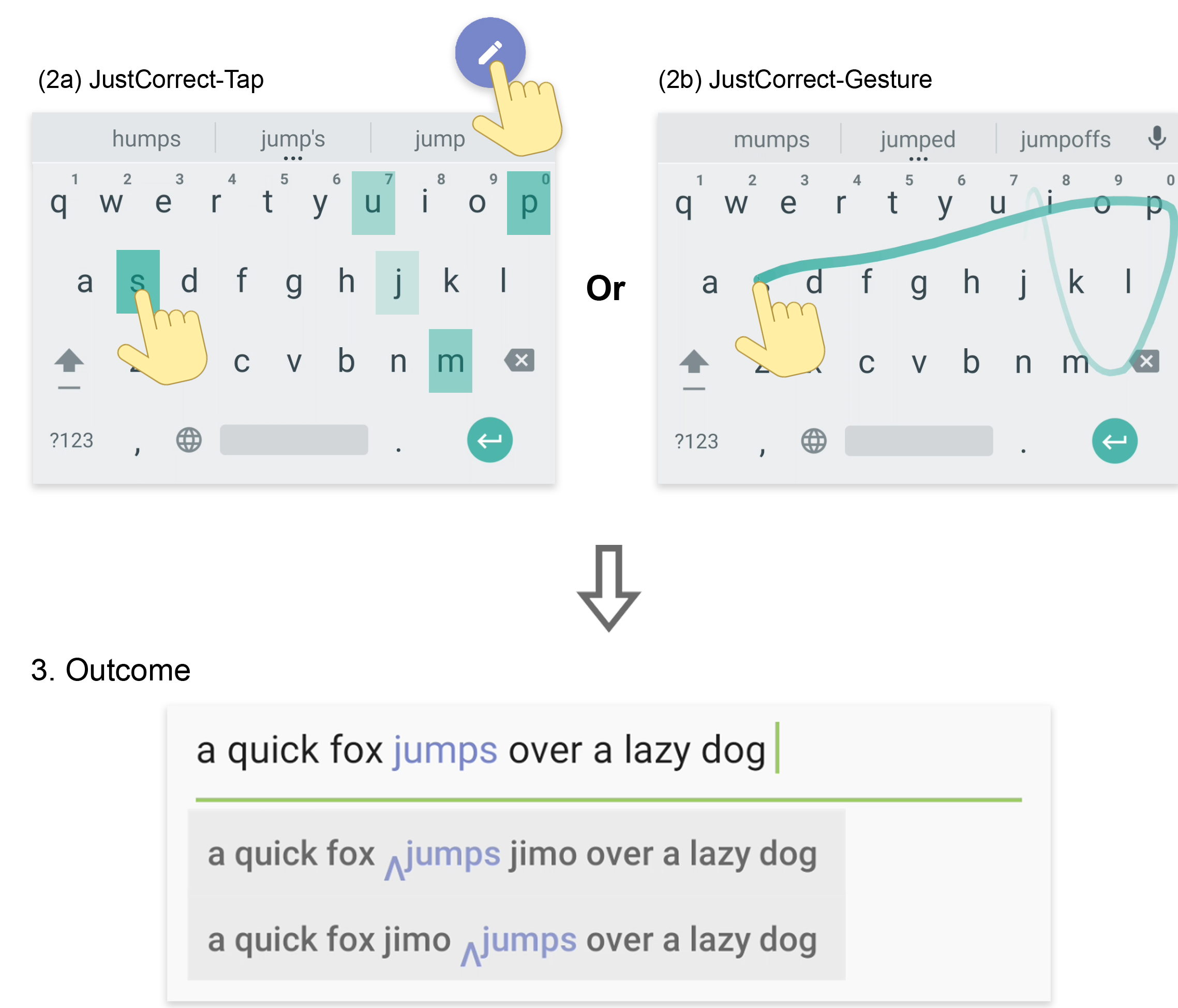

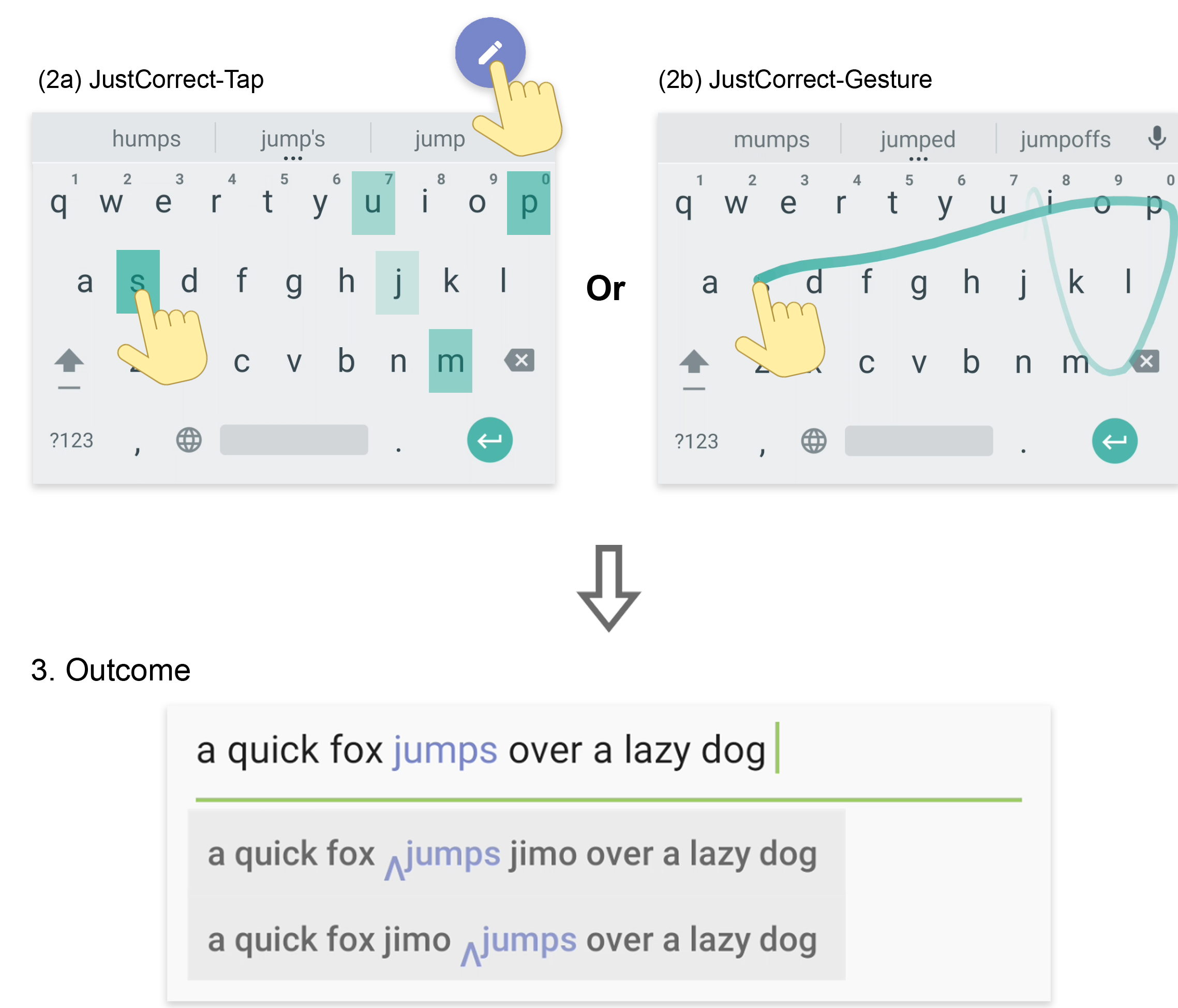

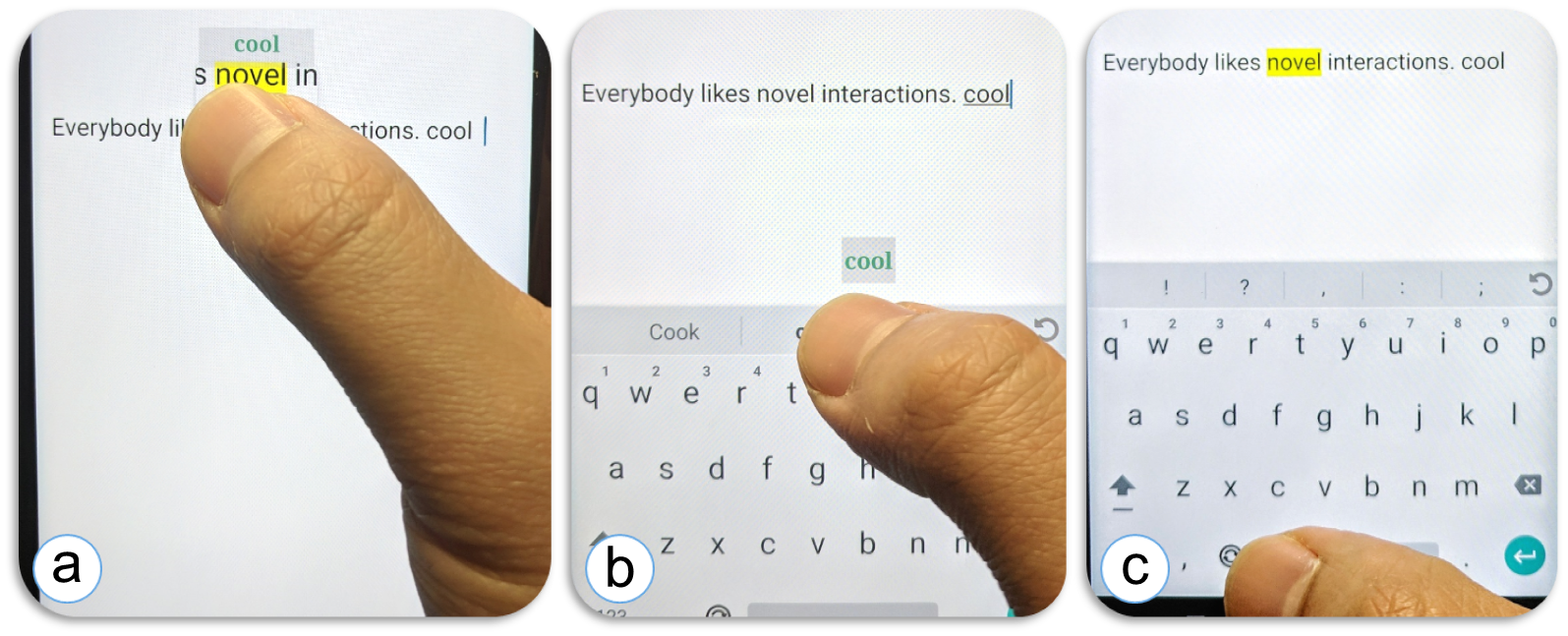

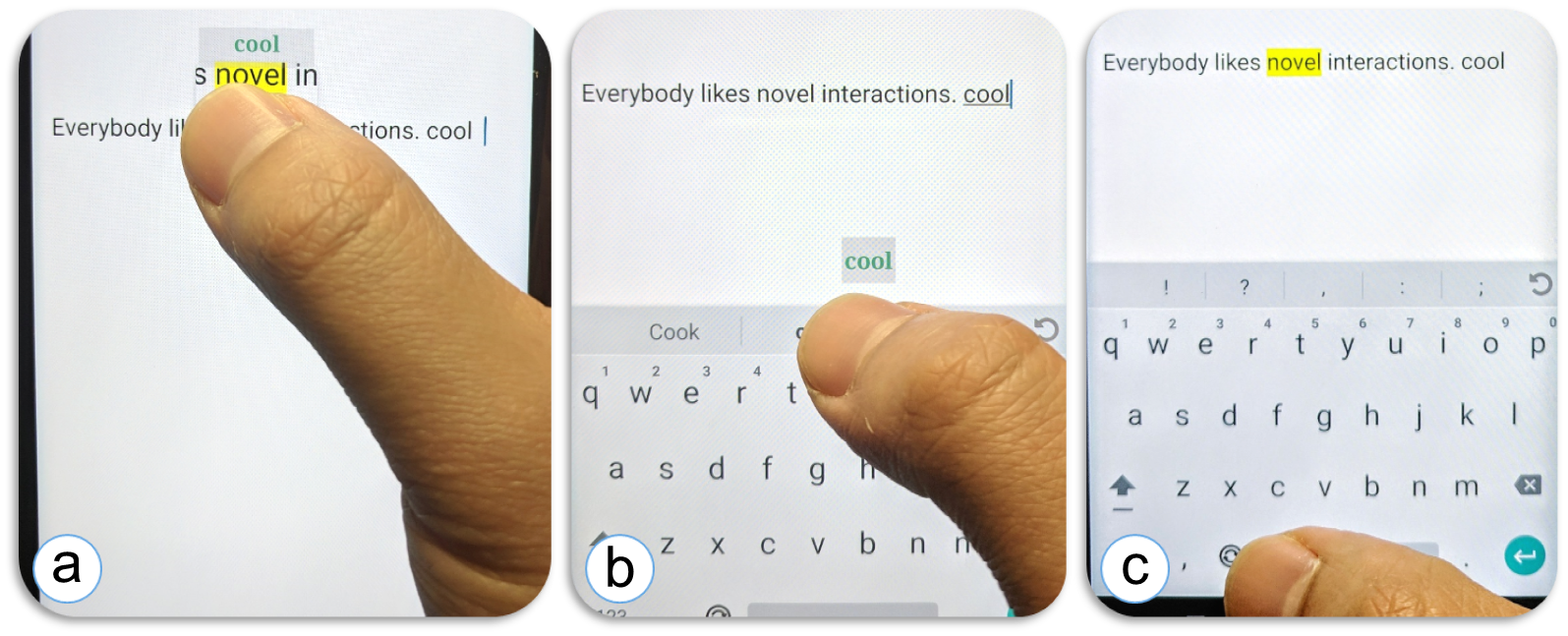

| The ACM Symposium on User Interface Software and Technology (UIST), 2019 My Presentation Demo Project page Instead of the usual touch-and-cursor correction process, why not rethink the correction interaction? In this paper, we present three novel interactions that allow the user to type the correction first, then apply it to the error location. Furthermore, we applied deep learning to enable automatic error detection for the interaction. Our correction RNN model |

Ubiquitous Text Entry 🙌

| The ACM CHI Conference on Human Factors in Computing Systems (CHI), 2022 Demo My presentation Project page TypeAnywhere is a QWERTY-based text entry system for off-desktop computing environments. Using a wearable device that can detect finger taps, users can leverage their touch-typing skills from physical keyboards to perform text entry on any surface. The average performance was 59.7 WPM (84.8% of the physical keyboard speed).  |

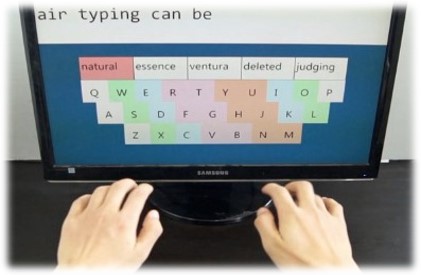

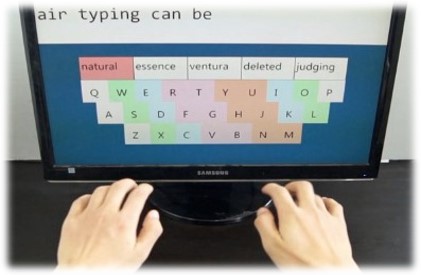

| The ACM Symposium on User Interface Software and Technology (UIST), 2015 Demo A novel air-typing method that tracks the fingers with a Leap Motion controller and uses an improved Bayesian prediction model, delivered with a working application. Users reached an average speed of 29.2 WPM. |

Evaluating Text-based Communication Systems 🧐

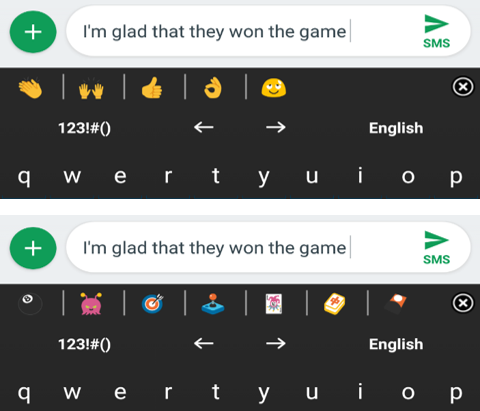

| iConference, 2021 My presentation We compared how lexical-based and semantic-based emoji suggestion mechanisms affected the online chatting experience through an in-lab study and a field deployment. The results showed that the emoji suggestion mechanism did not influence the chatting experience, and that users enjoyed both suggestion systems for different reasons.  |

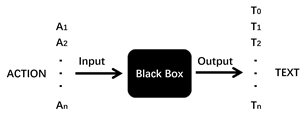

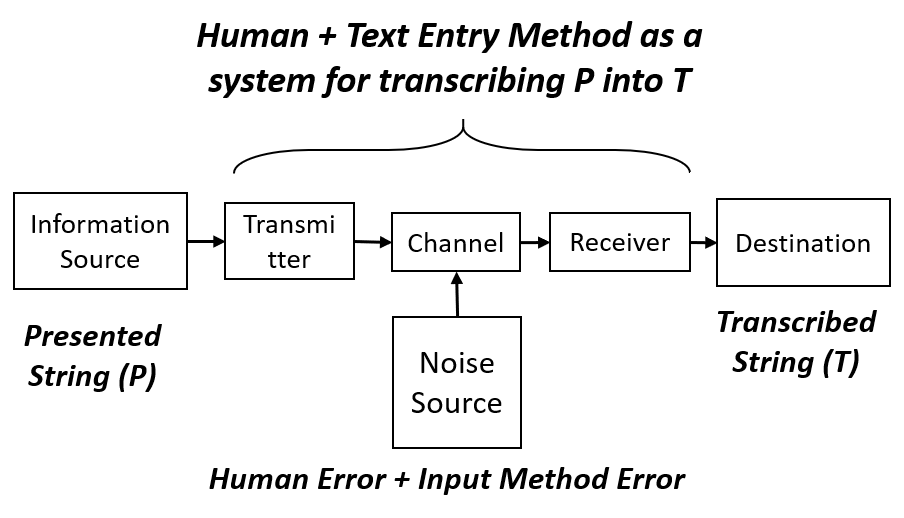

| The ACM Symposium on User Interface Software and Technology (UIST), 2019 Demo In this work, we present a new underlying model that supersedes the input stream model for general-purpose, method-independent, character-level text entry evaluation. Specifically, we present an approach that replaces the input stream with transcription sequences, or “T-sequences” for short. In brief, T-sequences are snapshots of the entire transcribed string after each text-changing action is taken by the user. Every pair of successive snapshots is then analyzed to compute character-level text entry metrics. TextTest++ platform  |
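
The snapshot-pair idea described above can be illustrated with a minimal sketch. This is not the TextTest++ algorithm: it assumes a generic string diff from Python's standard `difflib` as a stand-in for the paper's analysis, and the names `classify_transition` and `analyze_t_sequence` are hypothetical.

```python
from difflib import SequenceMatcher


def classify_transition(before: str, after: str) -> list[tuple[str, str, str]]:
    """Describe how one snapshot turned into the next.

    Returns (operation, removed_text, added_text) for every changed region,
    where operation is "insert", "delete", or "replace" (difflib's labels).
    """
    matcher = SequenceMatcher(None, before, after, autojunk=False)
    changes = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":  # skip the unchanged regions
            changes.append((tag, before[i1:i2], after[j1:j2]))
    return changes


def analyze_t_sequence(snapshots: list[str]) -> list[list[tuple[str, str, str]]]:
    """Diff every pair of successive snapshots in a T-sequence."""
    return [classify_transition(a, b) for a, b in zip(snapshots, snapshots[1:])]


# Hypothetical T-sequence: the user types "thw", backspaces, then types "e".
snapshots = ["", "t", "th", "thw", "th", "the"]
transitions = analyze_t_sequence(snapshots)
for step, changes in enumerate(transitions, start=1):
    print(step, changes)
```

Because each snapshot holds the whole transcribed string, the analysis needs no knowledge of the input method that produced it; counts of insertions and deletions could then feed character-level metrics.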

| The ACM CHI Conference on Human Factors in Computing Systems (CHI), 2019 My presentation Related blog post We define text entry throughput as a performance metric that combines speed and accuracy. Throughput is derived from the transmission ratio in information theory. Unlike other metrics, throughput is less affected by speed-accuracy tradeoffs, so it enables cross-device and cross-publication comparison. Throughput calculation library |

Assistive Digital Communication Systems 👨‍🦯

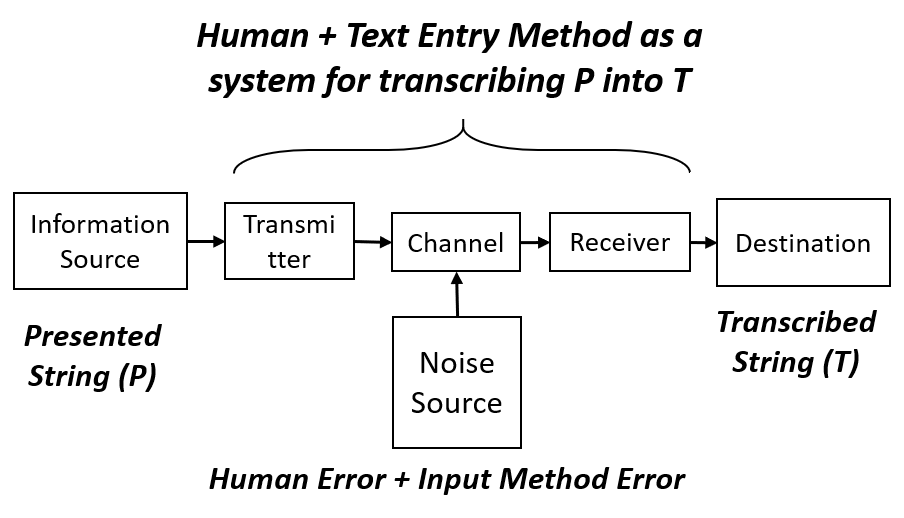

| The ACM CHI Conference on Human Factors in Computing Systems (CHI), 2022 Demo My presentation Project page Ga11y (pronounced "Gally") is an automatic GIF annotation system for blind or low vision users, combining the power of machine and human intelligence. It has an Android client, a remote server, and an annotation web interface. For a GIF annotation request, Ga11y first responds with results generated by computer vision and then uses crowdworkers to improve the annotation quality.  |

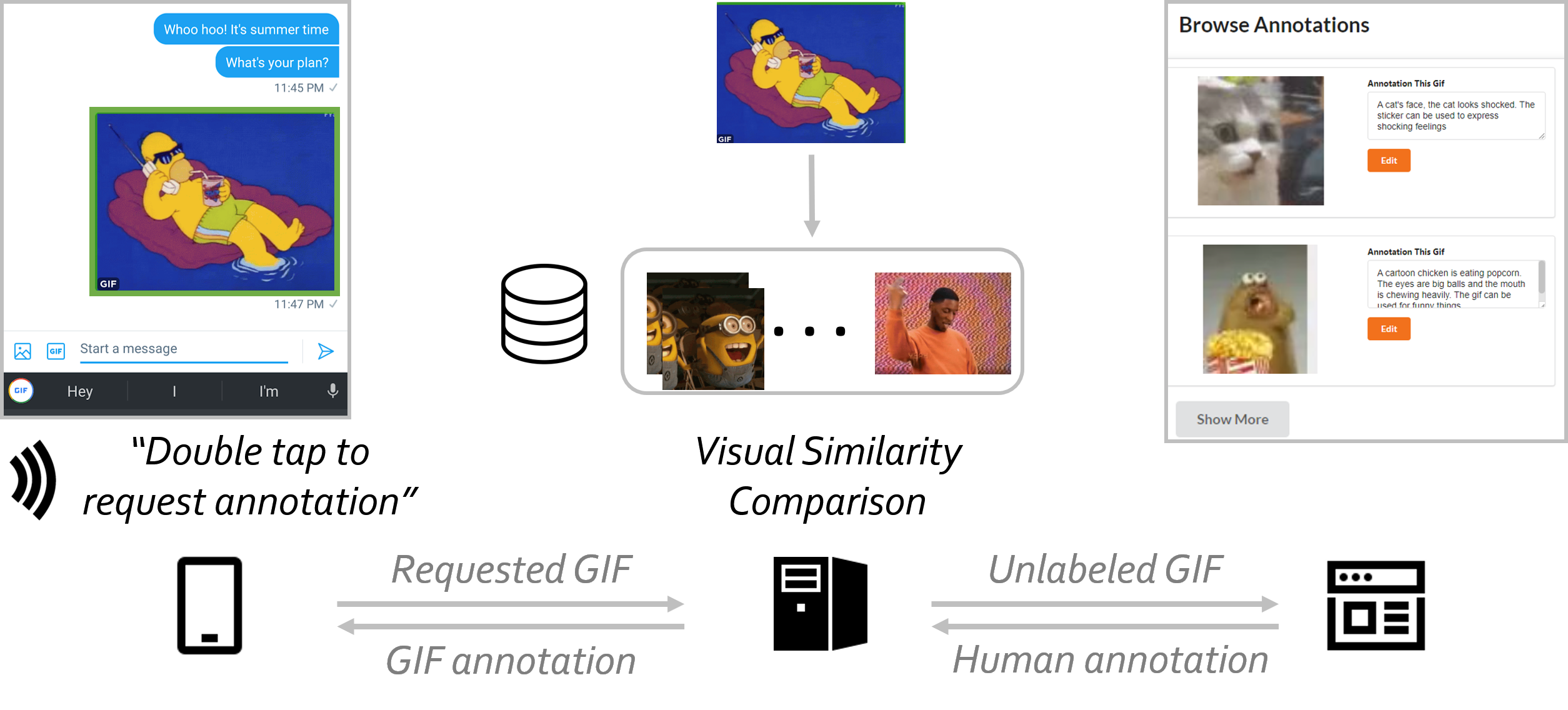

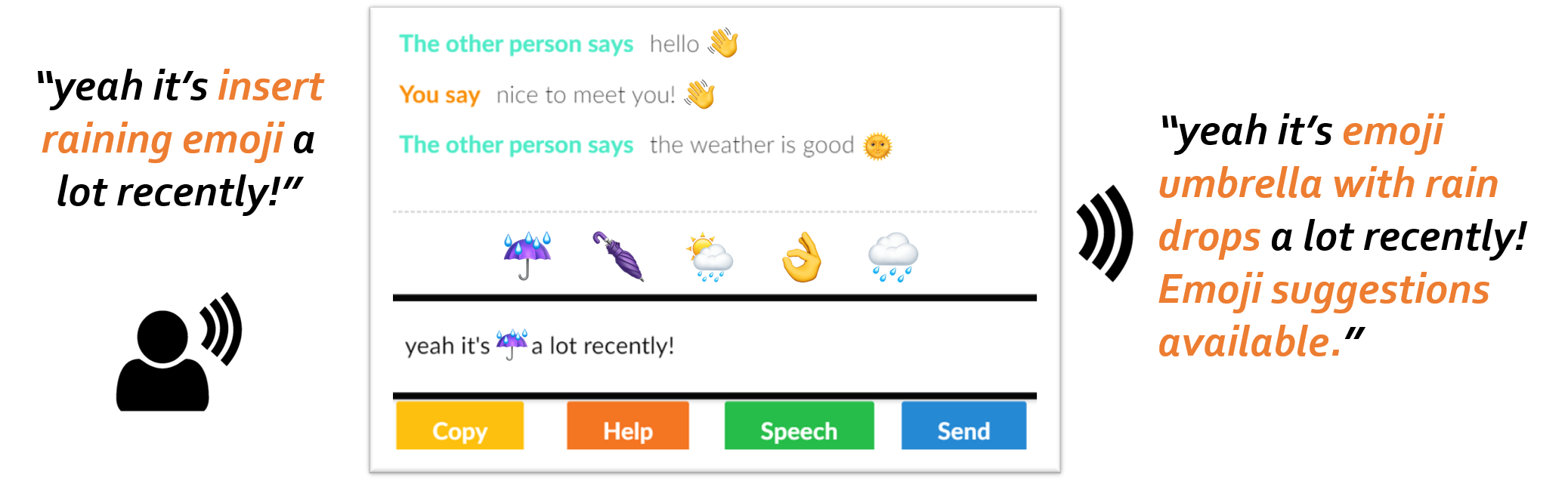

| The ACM CHI Conference on Human Factors in Computing Systems (CHI), 2021 My presentation Related blog post (in Chinese) Project page Voicemoji is a speech-based emoji input system designed for blind or low vision users. It supports natural-language emoji queries and context-sensitive emoji suggestions based on the spoken content. Voicemoji speeds up the emoji entry process by 91% compared with the iOS keyboard.  |

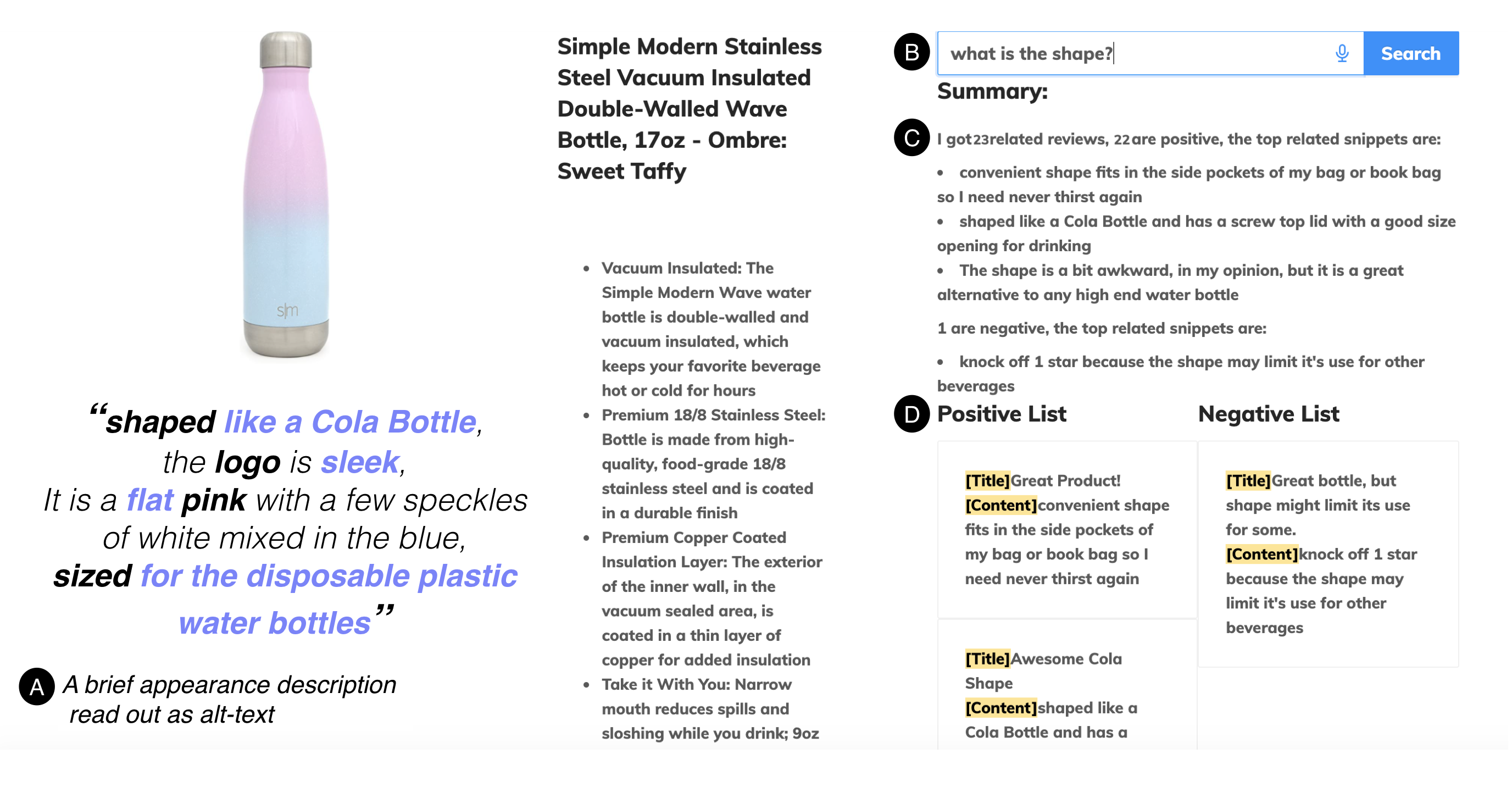

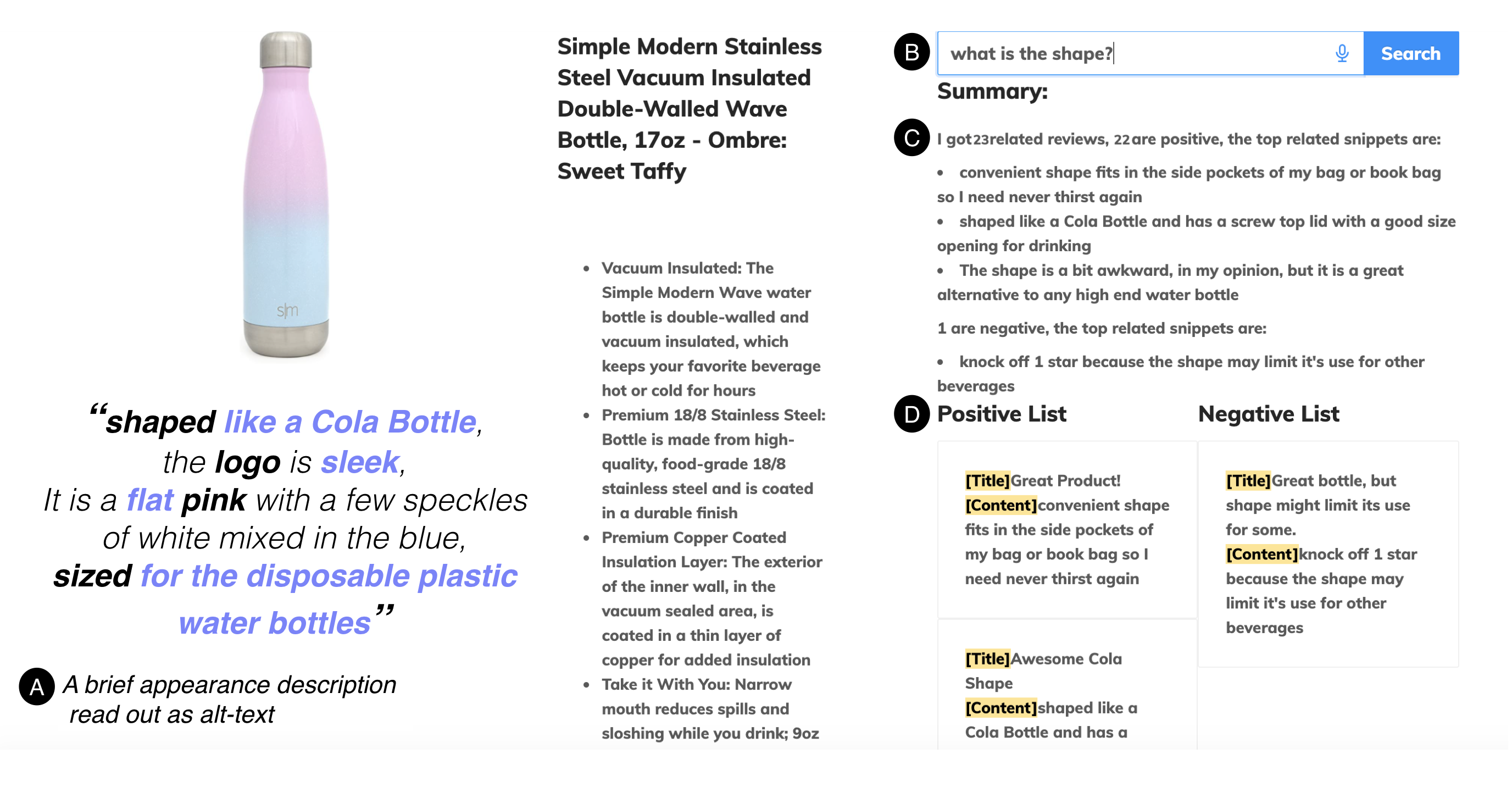

| The ACM CHI Conference on Human Factors in Computing Systems (CHI), 2021 Revamp is an online shopping system that aims to provide a simplified experience for blind and low vision (BLV) users. It extracts user reviews from the product page using linguistic rules and generates QA interfaces based on the review data, which provide information about the product's visual appearance. |

Talking with Smart Assistants 🤖

| Proceedings of the 20th Annual ACM Conference on Interaction Design and Children (IDC), 2021 Related UW News Does hanging out with Alexa or Siri affect the language routines children use to communicate with their fellow humans? In this work, we built two conversational agents and had them teach the kids a word, "bungo", that makes the agents speak quickly. Although the kids used the word with the agents, they were aware of the social context when facing similar situations with other people.  |

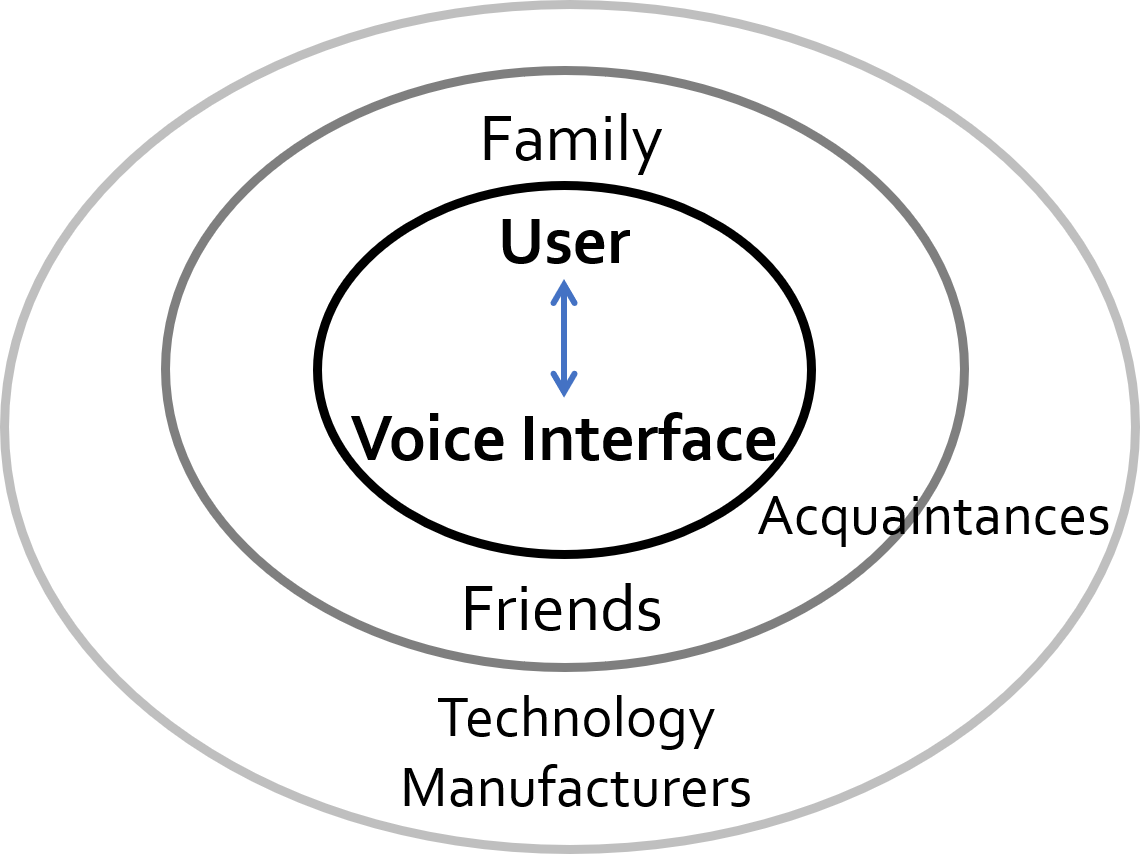

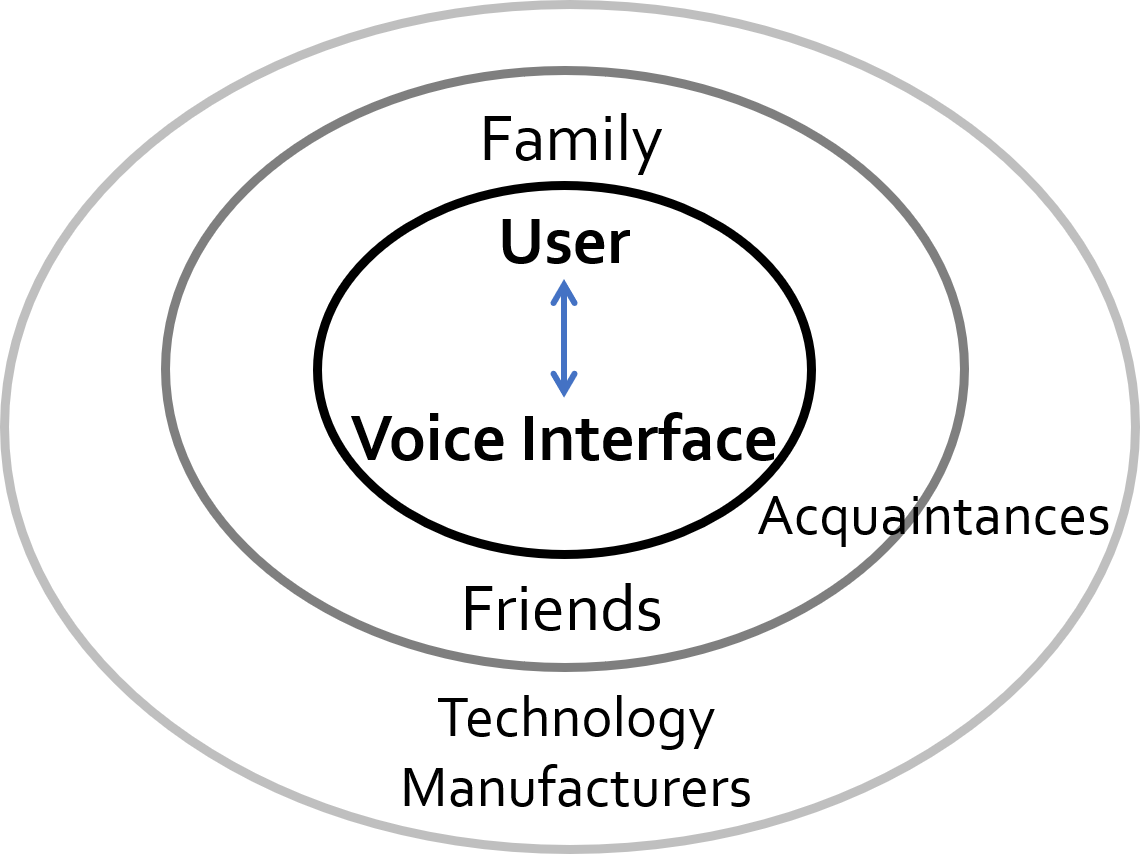

| Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT), 2020 Through a one-month deployment study, we investigated how families learned new functionalities of smart speakers, including 1) which features families are aware of and engage with, and 2) how families explore, discover, and learn to use the Echo Dot. Drawing from diffusion of innovation theory, we describe how a home-based voice interface might be positioned as a near-peer to the user and help them discover new functionalities.  |

| The ACM CHI Conference on Human Factors in Computing Systems (CHI), 2019 Erin's Presentation Related Blog Post We investigated different types of communication breakdowns and repair strategies in conversations between family members and Alexa. Our findings indicate that improving the technology’s ability to identify its communication partners and to provide specific clarification responses will ultimately improve the conversational interaction experience. |

Digital Information Wellbeing 🧘🏻

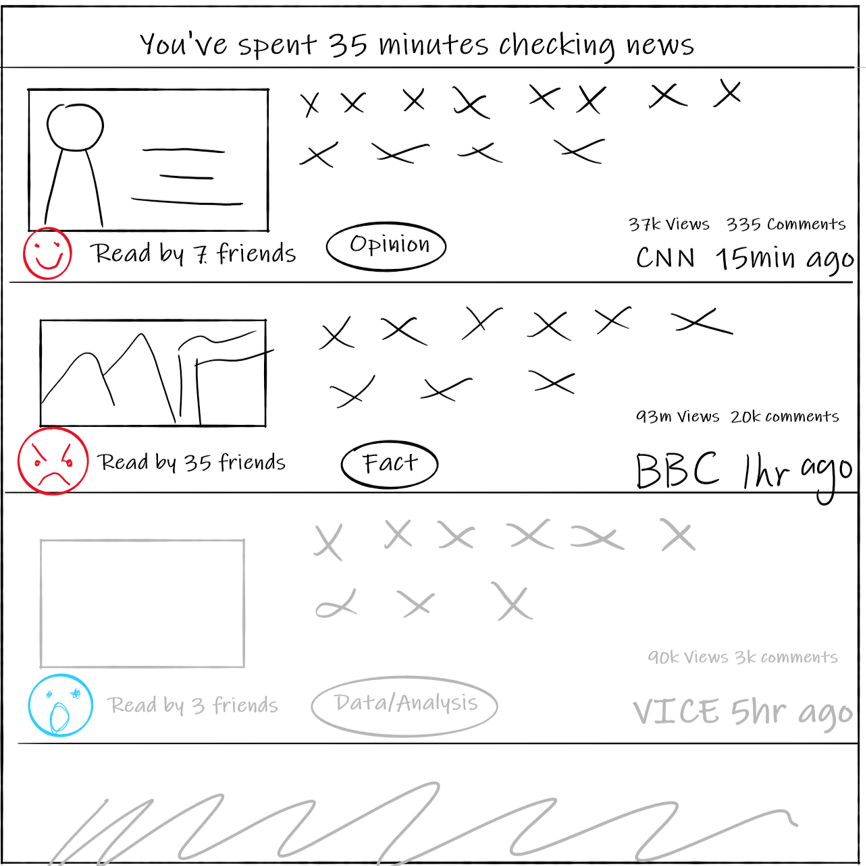

| Preprint, arXiv 2022 We conducted a two-week diary study to investigate how people sought information through digital news platforms, and found that the consumption experience followed two stages. In the seeking stage, participants increased their general consumption, motivated by three common informational needs: to find, understand, and verify relevant news pieces. Participants then moved to the sustaining stage, where coping with the news emotionally became as important as their informational needs. We also proposed interface designs for improving the consumption experience.  |

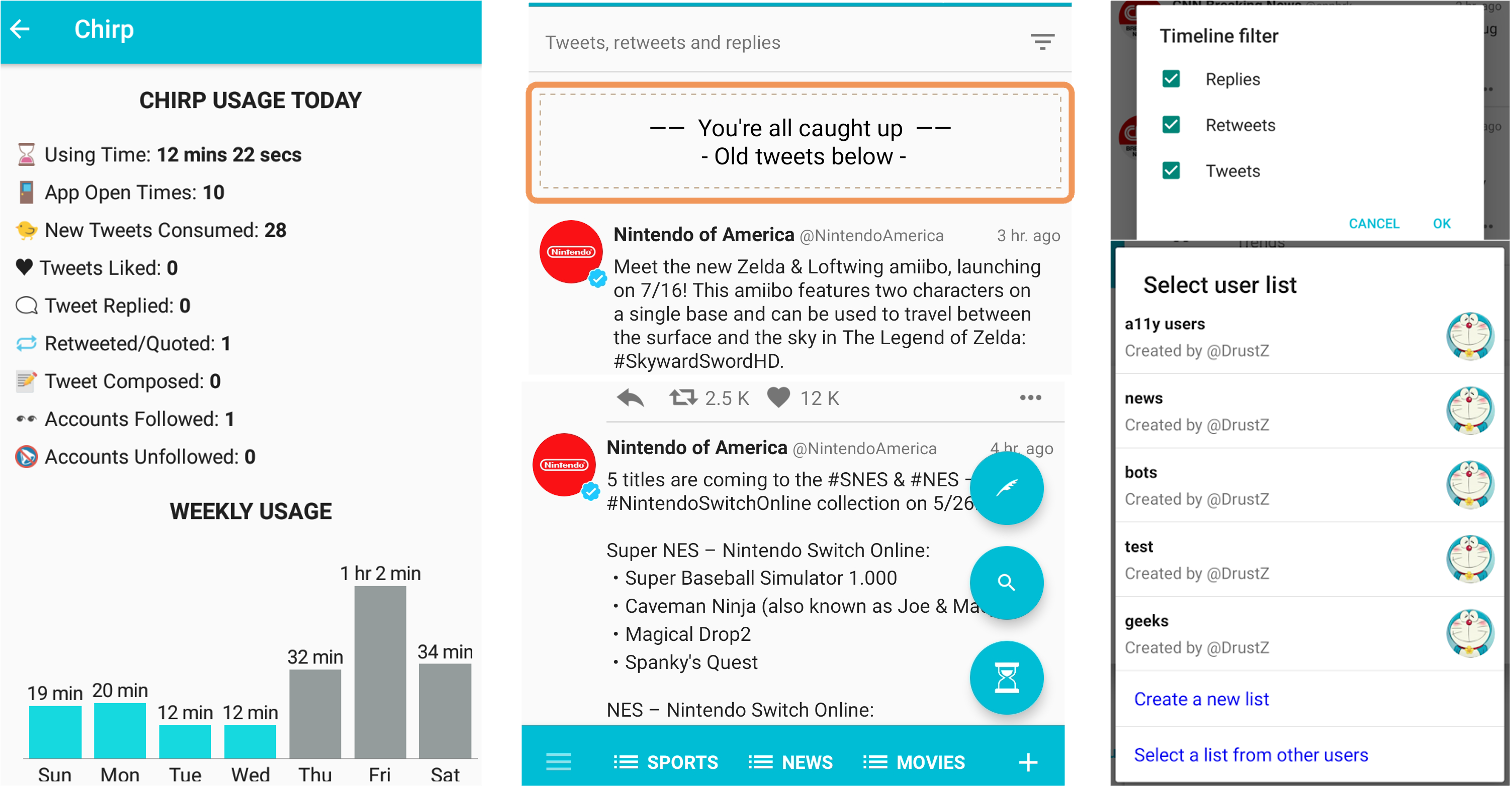

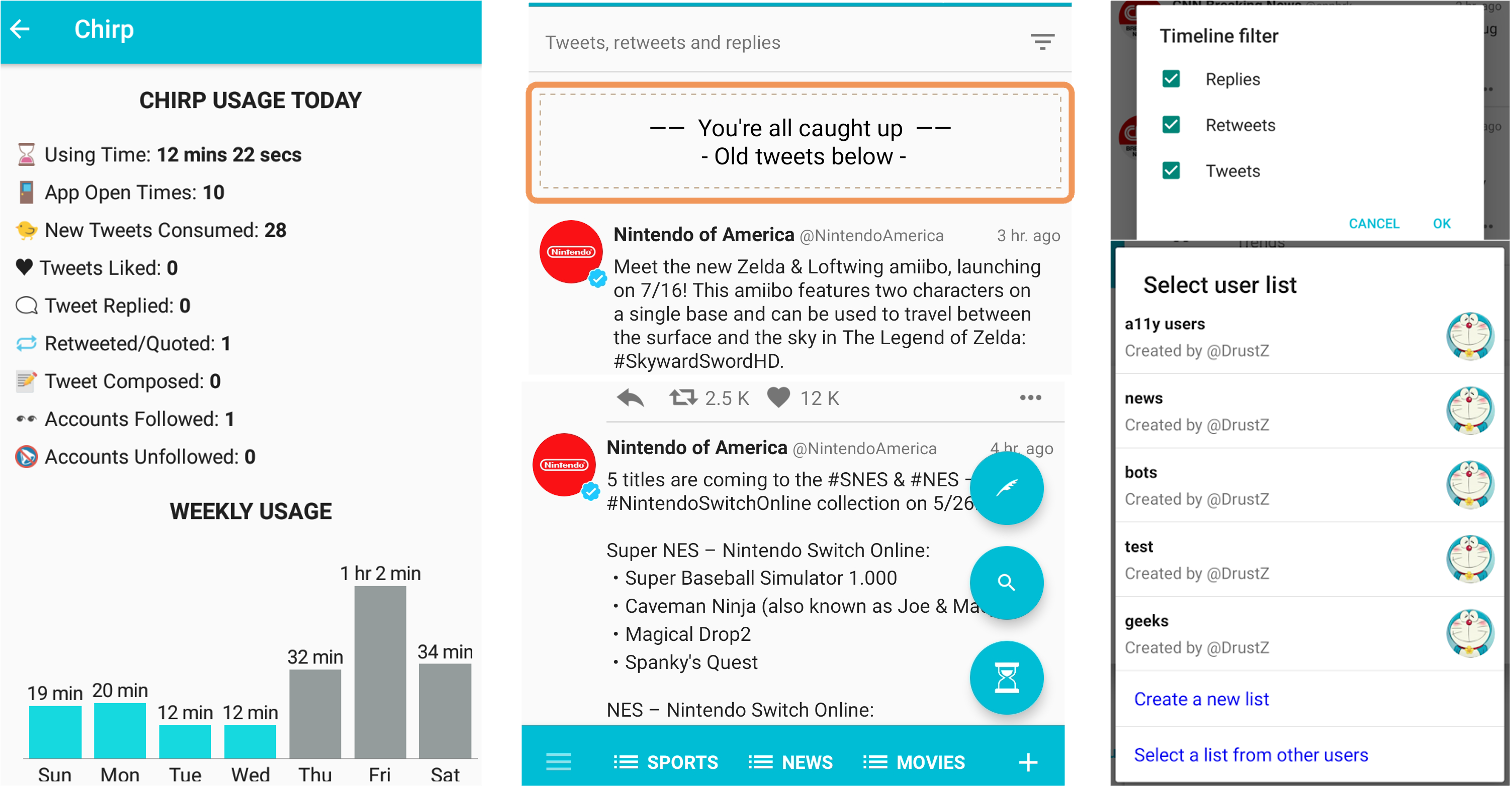

| The ACM CHI Conference on Human Factors in Computing Systems (CHI), 2022 My presentation Project page We design and deploy Chirp, a mobile Twitter client, to independently examine how users experience external supports (which monitor and limit app usage) and internal supports (which change the interface or features of the app itself, such as lists, filters, and reading clues). Our findings suggest that design patterns promoting agency may serve users better than screen time tools.  |

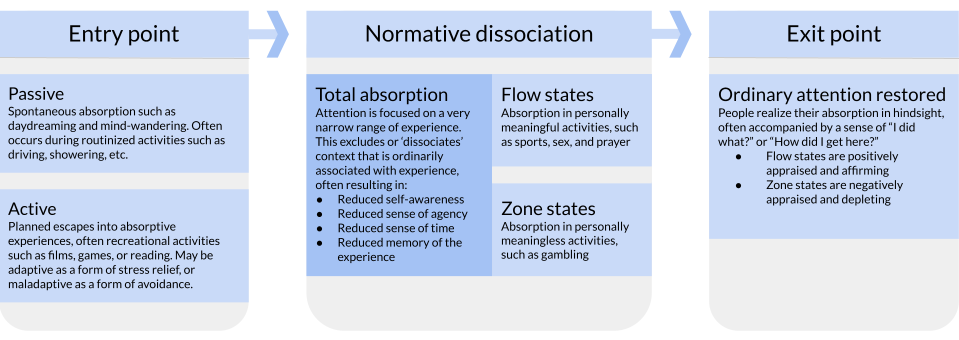

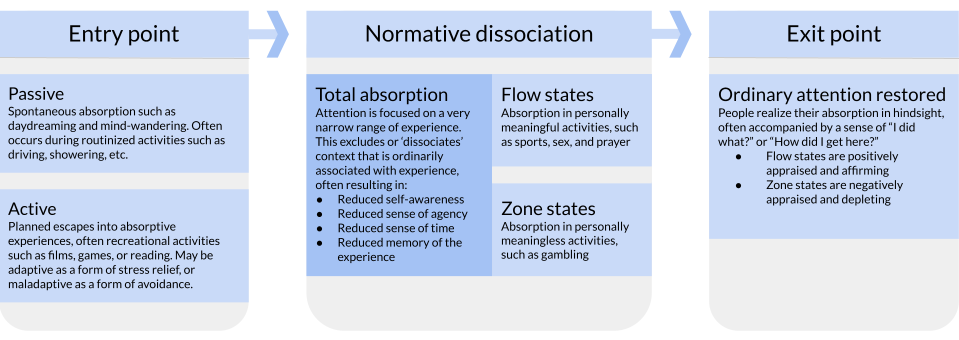

| The ACM CHI Conference on Human Factors in Computing Systems (CHI), 2022 Amanda's presentation UW News coverage Many people have experienced mindlessly scrolling on social media. We investigated these experiences through the lens of normative dissociation. We found that designed interventions--including custom lists, reading history labels, time limit dialogs, and usage statistics--reduced normative dissociation. |

MISC 💡

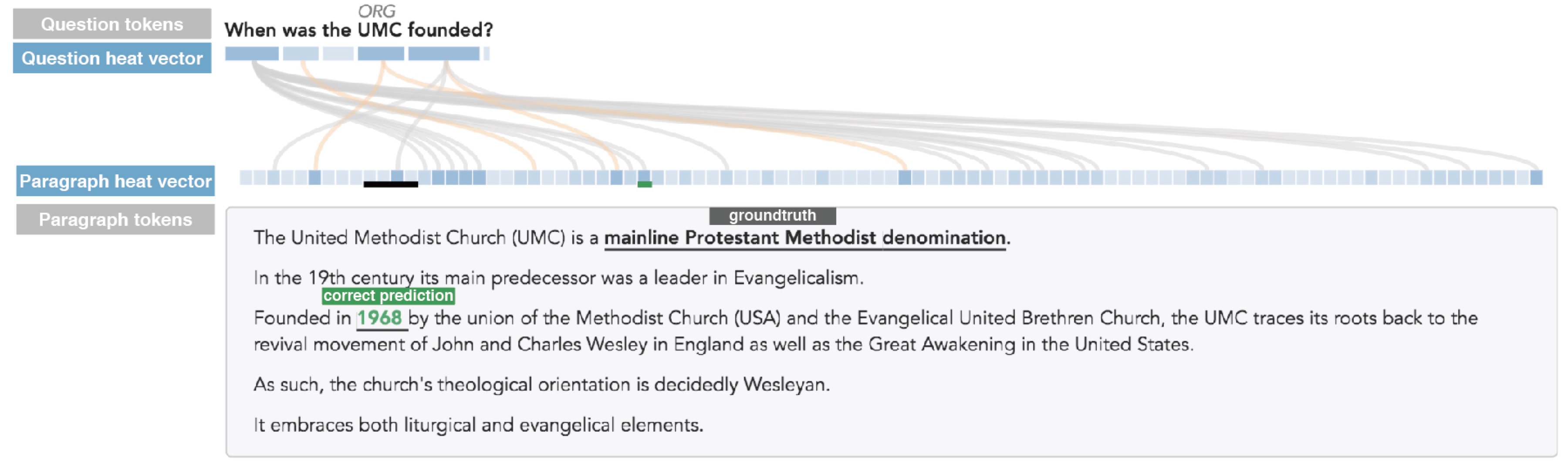

| IEEE Pacific Visualization Symposium (PacificVis), Notes, 2020 We provide an intuitive visualization tool for natural language processing tasks where attention is mapped between documents with imbalanced sizes. We extend the flow map visualization to enhance the readability of the attention-augmented documents. Our project page  |

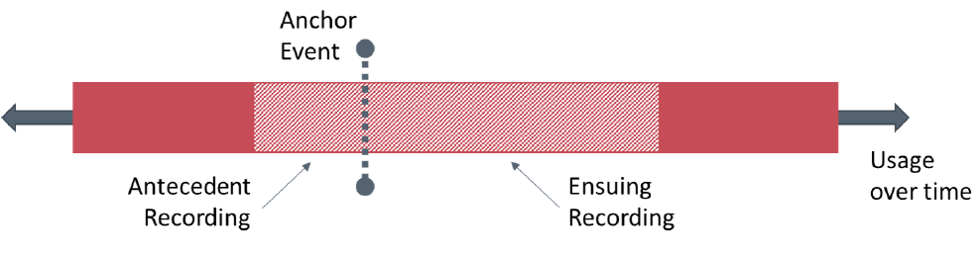

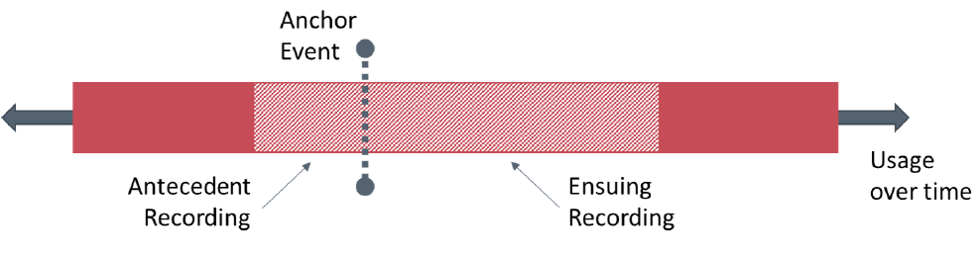

| The ACM CHI Conference on Human Factors in Computing Systems (CHI), 2019 Best Paper Award We present the Anchored Audio Sampling (AAS) method for remotely collecting qualitative audio samples during field deployments with young children. An anchor event triggers the recording, and a sliding window surrounding this anchor captures both the antecedent and the ensuing audio. Our AAS Library for Android |
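
The anchor-plus-sliding-window idea can be sketched with a rolling buffer. This is a minimal illustration, not the AAS Library for Android: the class `AnchoredSampler`, its parameters, and the use of integers in place of audio frames are all assumptions made for the example.

```python
from collections import deque


class AnchoredSampler:
    """Keep the last `before` frames; on an anchor, also keep the next `after`."""

    def __init__(self, before: int, after: int):
        self.recent = deque(maxlen=before)  # rolling window of past frames
        self.after = after                  # frames to keep after the anchor
        self.pending = None                 # clip being built, or None
        self.remaining = 0                  # post-anchor frames still needed
        self.clips = []                     # finished clips

    def push(self, frame) -> None:
        """Feed one frame from the continuous audio stream."""
        if self.pending is not None:
            self.pending.append(frame)
            self.remaining -= 1
            if self.remaining == 0:
                self._finish()
        self.recent.append(frame)

    def anchor(self) -> None:
        """Signal the anchor event; ignored while a clip is still in progress."""
        if self.pending is not None:
            return
        self.pending = list(self.recent)    # antecedent audio
        self.remaining = self.after
        if self.remaining == 0:
            self._finish()

    def _finish(self) -> None:
        self.clips.append(self.pending)
        self.pending = None


# Hypothetical stream of 10 frames with an anchor event after frame 5.
sampler = AnchoredSampler(before=3, after=2)
for t in range(10):
    sampler.push(t)
    if t == 5:
        sampler.anchor()
print(sampler.clips)
```

The bounded `deque` means audio outside the window is discarded continuously, so only the moments surrounding an anchor are ever retained.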